Dive in to D-Wave

The D-Wave SPAC post

Since we’re talking about SPACs again, this is my reminder that nothing you read here is financial advice.

After writing a draft of this piece, I did end up taking a small long position in $XPOA, which will convert to the D-Wave ticker $QBTS if the merger goes through.

You know the rules: another quantum computing SPAC, another post from The Quantum Observer. This time it’s D-Wave, which is ancient in QC terms, having existed since 1999. If you ignore some giant asterisks, D-Wave has had at least 128 qubits in a processor since 2011 [1].

As a certified Quantum Computing Opinion Haver, I watched the D-Wave investor webcast [2] to generate my scorching hot takes. It was genuinely interesting, and sometimes amusing, plus it’s short so you should go watch it yourself!

Technical

D-Wave’s CEO, Alan Baratz, reaffirmed their commitment to optimization problems, and therefore to annealing architectures. Based on Boston Consulting Group numbers, Baratz claims 1/3 of the TAM (total addressable market) for quantum computing is in optimization. He further claims that It Has Been Shown that gate model implementations of optimizers squander their speedups due to massive classical overhead, so annealers have the advantage here [3]. Baratz also states that, due to this overhead issue, they have ‘no competitors in the optimization sector’. I would definitely take this one with a grain of salt, because I expect there to be a lot of caveats when it comes to speed-ups existing or not existing for certain problems.

D-Wave also plans to roll out new annealing systems every few years with larger qubit counts and other upgrades, even as they work on a gate model architecture. One such upgrade is higher coherence, which was part of the schtick of IARPA's QEO program. I don’t recall any definitive empirical results showing that high coherence qubits gave annealers a clear advantage, so I am not entirely sure what motivated this upgrade on D-Wave’s part. It might just be a natural consequence of gate-model compatibility.

And, of course, D-Wave did announce that they’d be pursuing gate-model quantum computing as well [4]. In the webcast they cite their legitimately impressive array of IP in superconducting electronics, in the same league as IBM, Google, Intel, and, somehow, Northrop Grumman. Additionally, D-Wave operates its own fab, and has a huge amount of institutional circuit design, calibration, and test knowledge. These are all great reasons not to dismiss D-Wave’s foray into gate model QC. Sure, quantum annealing qubits have been relatively low coherence to date, but calibrating 5,000 such qubits with individual flux control is non-trivial.

One pretty shocking statement from Baratz is that D-Wave will be fabricating qubits in their multilayer process to achieve high qubit densities. He implies that no one else can do this, but realistically it’s probably closer to the truth that no one else chooses to do this. Why? Well, generally the dielectrics used in multilayer processes like this totally suck and destroy qubit coherence.

The reason D-Wave’s competitors fabricate qubits in a planar process on high quality crystalline wafers is precisely for coherence reasons. They are chasing density and scale through flip-chip assemblies, where high-density wiring appears on the non-qubit chip [5]. The ubiquity of flip-chip processes and the incredible difficulty of solving the dielectric problem likely explain why no one else is pursuing this idea. It’s a huge technical risk, but if they can somehow squeeze enough coherence out of their dielectric to make a plausible quantum computer, that technology could end up being a nice moat.

Financials

Much of the remainder of the presentation is spent on ‘real problems’ that D-Wave machines are solving for real companies. And, I guess, fine. Whatever. I have opinions on Real World Problems, and I’ll believe that D-Wave’s machines are the real deal when companies are paying real money to get access, not just $1M per year.

The actually interesting financial tidbit involves the mechanics of the SPAC. As you may know, only the PIPE [6] portion of the funding is guaranteed, which is $40M in this case. The remainder of the funding, $300M, is contingent on how many of the SPAC shareholders choose to redeem their shares for cash instead of keeping them. High redemptions could put the D-Wave business plan (below) in serious danger.

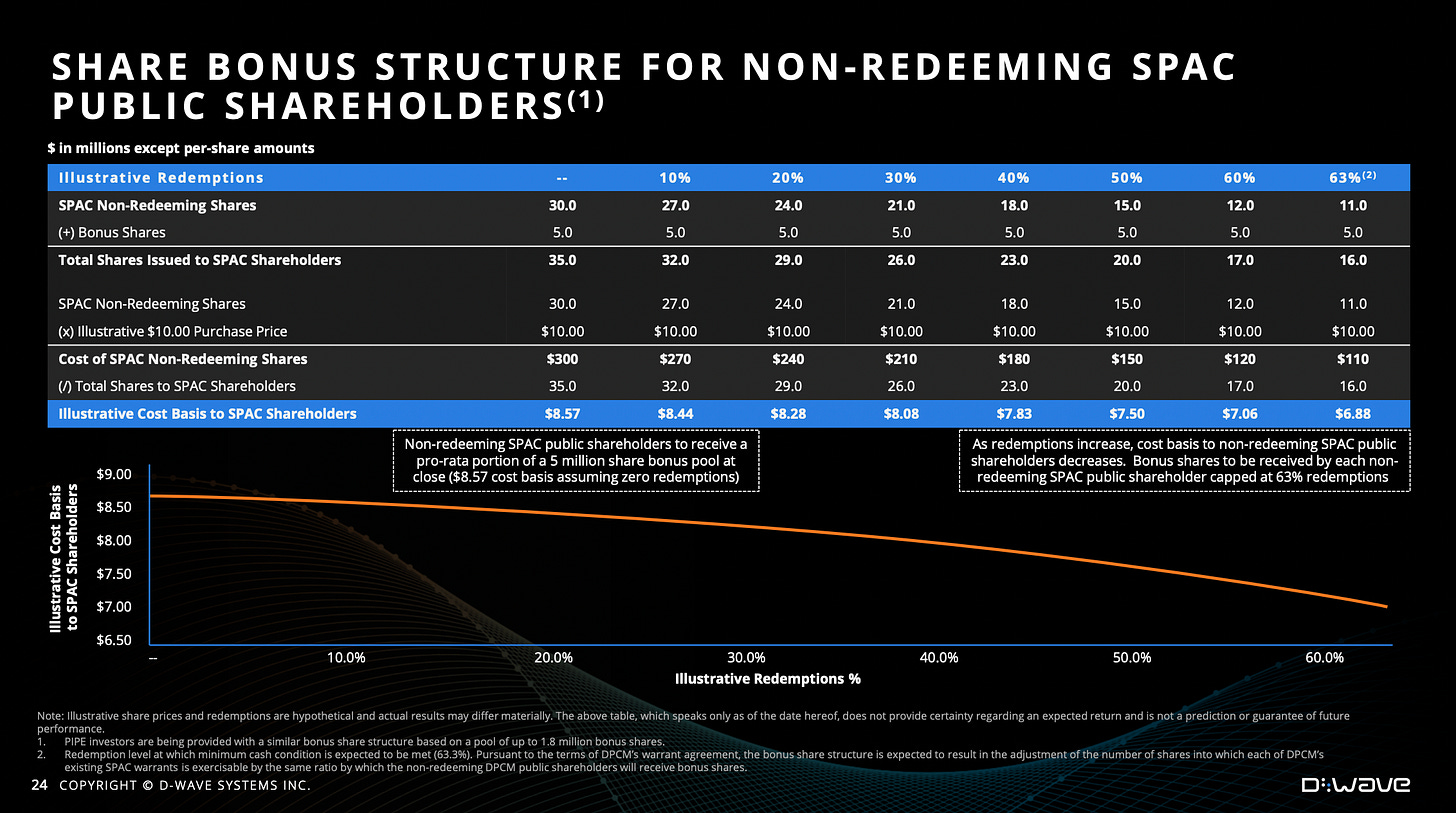

As you can see, the full SPAC funding amount of $340M gives D-Wave something like 3-4 years of runway before they expect to be profitable. To improve the chances of receiving all $300M, this SPAC includes 5 million bonus shares that are distributed to non-redeeming shareholders, effectively reducing the initial per-share cost basis from $10 to, at most, $8.57 at zero redemptions ($300M buys 30 million shares at $10, and 30 million plus 5 million bonus shares is 35 million shares for the same $300M). More redemptions mean an even steeper discount for shareholders who choose not to redeem. This is a pretty neat trick to burn down the funding risk to D-Wave.
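To make the bonus-share arithmetic concrete, here is a rough Python sketch. It assumes the $300M trust corresponds to 30 million shares at $10, and that the entire 5 million share bonus pool is split pro rata among whoever doesn't redeem, with no cap. That second assumption is mine; the actual deal terms may limit the per-share bonus, so treat the high-redemption numbers as illustrative only. The function name and parameters are made up for this example.

```python
def spac_outcome(redemption_rate, trust_cash=300e6, pipe_cash=40e6,
                 share_price=10.0, bonus_shares=5e6):
    """Return (cash raised by D-Wave, effective cost basis per share).

    Assumption (not from the deal documents): the full bonus pool is
    shared pro rata by non-redeeming shares, with no cap.
    """
    if not 0.0 <= redemption_rate < 1.0:
        raise ValueError("redemption_rate must be in [0, 1)")

    trust_shares = trust_cash / share_price             # 30M shares at $10
    remaining = trust_shares * (1.0 - redemption_rate)  # shares not redeemed

    # The PIPE money is guaranteed; trust cash only arrives for unredeemed shares.
    cash_raised = pipe_cash + remaining * share_price

    # Non-redeemers paid $10 per share but end up holding the bonus shares too.
    cost_basis = (remaining * share_price) / (remaining + bonus_shares)
    return cash_raised, cost_basis


for rate in (0.0, 0.25, 0.5, 0.75):
    cash, basis = spac_outcome(rate)
    print(f"redemptions {rate:4.0%}: cash ${cash / 1e6:6.1f}M, basis ${basis:.2f}")
```

Under these assumptions, zero redemptions gives the $340M and $8.57 figures quoted above, while 50% redemptions would leave D-Wave with $190M and non-redeemers with a $7.50 basis. That is the trade-off in a nutshell: the sweetener gets better for holders exactly as the funding picture gets worse for the company.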

Conclusion

Ok, with all of this in mind, what is the final word? Well, one thing I can say is that I totally disagree with Paul Smith-Goodson’s take on D-Wave:

I think D-Wave has shown that, if nothing else, they have strong experience and expertise in integrating qubits into large systems, they have scalable control for these systems, and they know how to calibrate them. Of course, their qubits are much different from what would be required for gate-model, but I believe the expertise they do have will be transferable when it comes to control and integration of gate model qubits into big systems.

To say that D-Wave is far behind in any real sense fails to appreciate the sheer technological gap between ~100 qubits and 1,000,000 qubits. I think it’s pretty obvious that, in the journey to 1 Million Qubits, 1 qubit is practically the same as 100. If I had to bet on only one SPACing superconducting qubit startup, I’d take D-Wave over Rigetti.

You shouldn’t ignore the asterisks, but it is a funny thing to state.

CITATION NEEDED. If you know what result Baratz is referring to, please let me know!

Discussed in a previous post.

You can see an example of this in a previous post about the Rigetti SPAC.

The "squandered advantage" in optimization refers to this paper by Google, I would've thought:

https://arxiv.org/abs/2011.04149

There, Grover-like speedups are swamped by constant-factor overheads from both fault-tolerant algorithms and error correction.